As you may have noticed if you follow me, I’m a big fan of Gephi and its streaming module.

In this article, I want to share how it can be used « officially » (I mean, the way I think it was designed to be used) and some tricks on top of that 😉.

Presenting Gephi Streaming

Put simply, the Gephi Streaming module lets you stream a graph over HTTP(S) between two Gephi instances. Basically, you send Gephi some JSON that says « add a node », « remove an edge », « change a node », etc. There is a marketplace page, a wiki and some articles that explain the concept and the beginnings of a protocol.
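To make that vocabulary concrete, here is a minimal Python sketch that builds the JSON messages. The event keys (`an`, `cn`, `dn` to add, change or delete a node, and `ae`, `ce`, `de` for edges) and the `{"an":{"A":{...}}}` shape follow the Gephi Streaming wiki as I understand it; the helper names `node_event` and `edge_event` are hypothetical, just for illustration.

```python
import json

# Event keys of the Gephi Streaming JSON format (as documented on the wiki):
# "an"/"cn"/"dn" add/change/delete a node, "ae"/"ce"/"de" do the same for an edge.
NODE_EVENTS = {"add": "an", "change": "cn", "delete": "dn"}
EDGE_EVENTS = {"add": "ae", "change": "ce", "delete": "de"}


def _compact(obj):
    # Compact separators give the same shape as {"an":{"A":{"size":2}}}
    return json.dumps(obj, separators=(",", ":"))


def node_event(action, node_id, **attributes):
    """Build one node event keyed by the node ID (hypothetical helper)."""
    return _compact({NODE_EVENTS[action]: {node_id: attributes}})


def edge_event(action, edge_id, **attributes):
    """Build one edge event; for an add, pass source, target and directed."""
    return _compact({EDGE_EVENTS[action]: {edge_id: attributes}})


if __name__ == "__main__":
    print(node_event("add", "A", label="Streaming Node A", size=2))
    print(node_event("add", "B", label="Streaming Node B", size=2))
    print(edge_event("add", "AB", source="A", target="B", directed=False, weight=2))
    print(node_event("change", "A", size=5))
    print(edge_event("delete", "AB"))
```

Each printed line is one self-contained message you could send to a Master.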

If you’re already using the API, you will probably ask « Why? The Java API can do everything and more ». I agree, but the API is quite confusing in that it’s not « simple » to bootstrap for a small project (OK, I didn’t spend a lot of time on it, but I tried and wasn’t convinced).

I also bet that you (data scientists, data journalists, data pornographers, etc.) use Python, Ruby, Scala, R or some other programming language. Gephi Streaming is based on HTTP(S)/REST, which means it’s open to almost every language, as long as you have minimal support for HTTP requests.

It should also be possible to share and work on live graphs (e.g. a live stream of Twitter statuses) with people all over the world via the Internet.

How it works, how it reacts

When you first look at the module after downloading it, you will see that it’s quite simple and that there are only two « modes »:

- Client: this part makes it possible to connect to and synchronise with another Gephi instance (see Gephi to Gephi below).

- Master: the most important part. This is where you create a master server that receives graph actions and updates the clients registered to it.

As I already said, the concept of graph streaming is to send actions on nodes or edges (add, change, delete). What’s important here is that each action uses an ID to refer to a Gephi element (node or edge).
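Because the ID is the only handle you have on an element, an external tool needs to generate IDs deterministically, so that re-sending the same link refers to the same edge rather than to a new element. One possible convention, sketched below, is to use a natural key (a crawled URL, a user name...) as the node ID and to derive the edge ID from its endpoints. The `edge_id` helper and its `->`/`--` separators are my own hypothetical choice, not something Gephi imposes.

```python
def edge_id(source_id, target_id, directed=True):
    """Derive a deterministic edge ID from its endpoints (hypothetical convention).

    Pick separators that cannot appear inside your node IDs.
    """
    if directed:
        return f"{source_id}->{target_id}"
    # For undirected edges, A-B and B-A are the same edge: sort the endpoints
    first, second = sorted((source_id, target_id))
    return f"{first}--{second}"


if __name__ == "__main__":
    # Node IDs are simply the crawled URLs here
    a, b = "http://example.org/a", "http://example.org/b"
    print(edge_id(a, b))                  # http://example.org/a->http://example.org/b
    print(edge_id(b, a, directed=False))  # http://example.org/a--http://example.org/b
```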

Gephi to Gephi

Gephi Streaming lets you connect two Gephi instances (and even individual workspaces, since 0.8.2).

It’s pretty simple to set up. Let’s say you have two Gephi instances running, one on 192.168.0.1 (short name One) and another on 192.168.0.2 (short name Two), to be « network funny ».

The idea is that a Master provides a graph to a Client. So let’s completely undemocratically decide that One is the master and Two is the client.

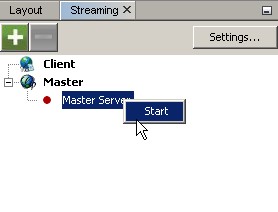

On One, go to the Gephi Streaming module, right-click on Master Server and choose Start. You’re now running a master at http://192.168.0.1:8080 (you can change the default settings and even enable SSL). I assume that you are on workspace0.
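From a script’s point of view, the master is just a URL: the machine’s host and port plus the workspace name, with an `operation` query parameter when you push changes (`?operation=updateGraph`, as used in the code later in this article). A small helper keeps that in one place; `workspace_url` is a hypothetical name, and 8080 is only the default port mentioned above (pass whatever port you configured, especially with SSL).

```python
from urllib.parse import urlencode


def workspace_url(host, workspace="workspace0", port=8080, operation=None, https=False):
    """Build the URL of a Gephi Streaming master workspace (hypothetical helper)."""
    scheme = "https" if https else "http"
    url = f"{scheme}://{host}:{port}/{workspace}"
    if operation is not None:
        # e.g. operation="updateGraph" to push events to the master
        url += "?" + urlencode({"operation": operation})
    return url


if __name__ == "__main__":
    print(workspace_url("192.168.0.1"))                           # what a Client connects to
    print(workspace_url("192.168.0.1", operation="updateGraph"))  # where a tool POSTs events
```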

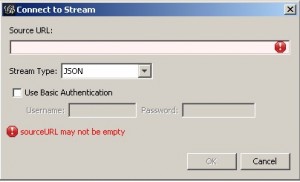

Now, on Two, right-click on Client and choose Connect to Stream. A window opens (don’t worry): enter http://192.168.0.1:8080/workspace0 and click OK. Congratulations, the two Gephi instances are connected! If everything is working, every structural change in the Master (add, change, delete) should be reflected in the Client.
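The Client does not have to be Gephi. As far as I understand the protocol, connecting to the workspace URL gives a long-lived HTTP response in which the master writes events in the same JSON format, one per line. Under that assumption, a Python reader only needs to split the stream into lines and decode each one. `read_events` is a hypothetical helper; it accepts any binary file-like object (such as the response of `urllib.request.urlopen`), so it can be tried without a running master.

```python
import io
import json


def read_events(stream):
    """Yield (event_type, element_id, attributes) from a line-delimited JSON stream.

    Assumes one JSON object per line, e.g. {"an":{"A":{"size":2}}}; one object
    may carry several elements, which are yielded one by one.
    """
    for raw_line in stream:
        line = raw_line.decode("utf-8").strip()  # also drops "\r\n" line endings
        if not line:
            continue  # skip empty lines between events
        for event_type, elements in json.loads(line).items():
            for element_id, attributes in elements.items():
                yield event_type, element_id, attributes


if __name__ == "__main__":
    # Simulated stream; with a real master you could try
    # urllib.request.urlopen("http://192.168.0.1:8080/workspace0") instead.
    fake = io.BytesIO(
        b'{"an":{"A":{"size":2},"B":{"size":3}}}\r\n\r\n'
        b'{"ae":{"AB":{"source":"A","target":"B","directed":false}}}\r\n'
    )
    for event in read_events(fake):
        print(event)
```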

External to Gephi

Another possibility is to send actions from external tools (crawler, web spider, script that analyses a CSV file, etc.) to a Gephi instance. To do that, just open Gephi and launch a Master Server. On the tool side, just send HTTP requests as described here.

What’s interesting here is that you add value to your tool by getting a real-time graph representation of your data. So, instead of writing a temporary GEXF file, you can just use this functionality to import any graph into Gephi.

In terms of code, you can check my GephiStreamer Python class on GitHub:

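```python
# GephiStreamerManager.send (method excerpt; Node and Edge are GephiStreamer's own classes)
import json

import requests


def send(self, action, iGraphEntity):
    # Node/Edge objects carry their JSON-ready payload in .object; anything else is sent as-is
    if isinstance(iGraphEntity, (Node, Edge)):
        postAction = {action: iGraphEntity.object}
    else:
        postAction = {action: iGraphEntity}
    params = json.dumps(postAction)  # Create a JSON string that respects the message format
    aSendURL = self.name()  # http://somehost:someport/someworkspace?operation=updateGraph
    r = requests.post(aSendURL, data=params)  # Send a POST request; if the Master is running, Gephi should display the element
    r.raise_for_status()  # Surface HTTP errors instead of silently ignoring them
    return r
```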

Another example in Java:

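```java
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.net.URL;
import java.net.URLConnection;
import java.nio.charset.StandardCharsets;

public void sendAction(IGephiStreamAction a) throws Exception
{
    URL url = new URL(this.getTargetWorkspaceInstance() + "?operation=updateGraph");
    URLConnection conn = url.openConnection();
    conn.setDoOutput(true); // writing a body turns the HTTP request into a POST
    try (Writer writer = new OutputStreamWriter(conn.getOutputStream(), StandardCharsets.UTF_8)) {
        writer.write(a.getJson()); // e.g. {"an":{"A":{"label":"Streaming Node A","size":2}}}
    }
    // Reading the response completes the exchange; close it to free the connection
    conn.getInputStream().close();
}
```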

I think you get the point now ;).

What is nice is the « error handling », which is quite permissive:

- If you define a node twice, the second definition does nothing.

- If you define an edge pointing to a node that doesn’t exist, nothing happens.

- If you update a node that doesn’t exist, nothing happens.

You still have to take care of all the special cases yourself, but I find it quite practical that it isn’t psycho-rigid 😉
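Since mistakes are silently ignored, a typo in an ID or an edge sent before its nodes simply never shows up. To check what a sequence of events should produce before sending it, it can help to replay it against a toy model that follows the three rules above. The sketch below is my own simplified model of the behaviour described, not Gephi’s implementation; `ToyGraph` is a hypothetical name, and the removal of edges along with a deleted node is an extra assumption.

```python
class ToyGraph:
    """Toy replay of streaming events following the permissive rules described above."""

    def __init__(self):
        self.nodes = {}  # node ID -> attributes
        self.edges = {}  # edge ID -> attributes (including source and target)

    def apply(self, event_type, element_id, attributes):
        if event_type == "an":
            self.nodes.setdefault(element_id, dict(attributes))  # adding twice: no-op
        elif event_type == "cn":
            if element_id in self.nodes:  # changing an unknown node: no-op
                self.nodes[element_id].update(attributes)
        elif event_type == "dn":
            self.nodes.pop(element_id, None)
            # Assumption: edges touching a deleted node disappear with it
            self.edges = {eid: e for eid, e in self.edges.items()
                          if element_id not in (e["source"], e["target"])}
        elif event_type == "ae":
            known = (attributes.get("source") in self.nodes
                     and attributes.get("target") in self.nodes)
            if known and element_id not in self.edges:  # dangling edge: no-op
                self.edges[element_id] = dict(attributes)
        elif event_type == "ce":
            if element_id in self.edges:
                self.edges[element_id].update(attributes)
        elif event_type == "de":
            self.edges.pop(element_id, None)


if __name__ == "__main__":
    g = ToyGraph()
    g.apply("ae", "AB", {"source": "A", "target": "B"})  # sent too early: ignored
    g.apply("an", "A", {"size": 2})
    g.apply("an", "A", {"size": 9})                       # duplicate: ignored
    g.apply("cn", "C", {"size": 4})                       # unknown node: ignored
    g.apply("an", "B", {})
    print(g.nodes, g.edges)                               # {'A': {'size': 2}, 'B': {}} {}
```

The replay makes the practical consequence visible: always send nodes before the edges that reference them.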

I use this technique to convert almost anything into a Gephi graph (data handcrafted in a text file, server logs converted live with shell scripts, etc.).
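As an example of that kind of conversion, here is a sketch that turns a hand-written text file with one `source target` pair per line into streaming events, emitting each node the first time it is seen and always before the edges that use it. The file format, the `#` comments, the `source->target` edge IDs and the `edge_list_to_events` name are all assumptions for the illustration; each resulting line can then be POSTed to `?operation=updateGraph` like in the Python and Java snippets earlier.

```python
import json


def edge_list_to_events(lines):
    """Convert "source target" lines into Gephi Streaming JSON events (hypothetical format)."""
    seen = set()
    events = []
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        fields = line.split()
        if len(fields) < 2:
            continue  # empty or malformed line: not an edge
        source, target = fields[0], fields[1]
        for node in (source, target):
            if node not in seen:  # add each node once, before any edge uses it
                seen.add(node)
                events.append({"an": {node: {"label": node}}})
        edge_id = f"{source}->{target}"  # hypothetical edge ID convention
        # Edges are streamed as directed here
        events.append({"ae": {edge_id: {"source": source, "target": target, "directed": True}}})
    return [json.dumps(event, separators=(",", ":")) for event in events]


if __name__ == "__main__":
    text = """
    # who links to whom
    alice bob
    bob carol
    """
    for event in edge_list_to_events(text.splitlines()):
        print(event)
```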

Architecture

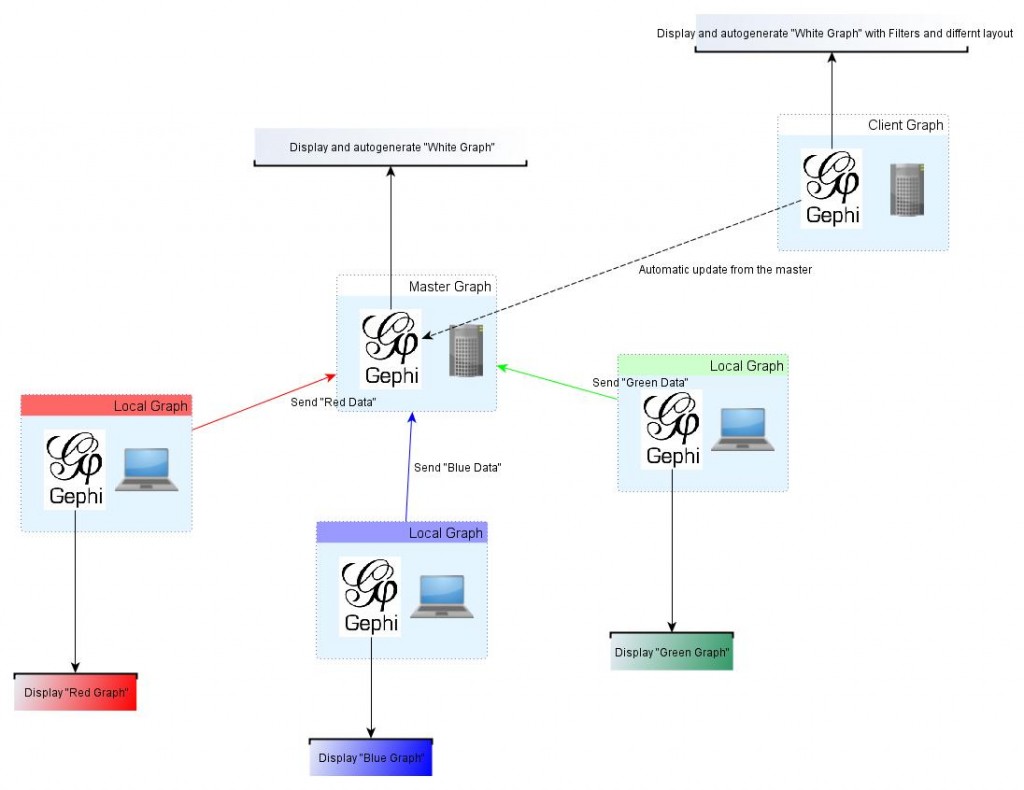

Knowing these two behaviours, we can imagine a streaming architecture where software talks to Gephi instances, which in turn talk to other Gephi instances.

A simple example: we saw in Gephi to Gephi that a Client can register to a Master. But you can register to as many Masters as you want, so a Client can compile two different graphs at the same time.

This leads to an open idea of collaborative network analysis and generation. A year ago, I ran an experiment at my university. We were given one topic (the websphere of « abortion »; I would have preferred lolcats, but teachers are grumpy in France 😀): half of the students crawled the « pro » websites and the other half the « anti » websites.

The architecture used here is simple: each working pair ran ArrowV (crazy advertising, isn’t it?), a command-line web crawler, on their computer, which also ran Gephi as a Master. We also had a computer used by nobody that ran Gephi as a Master too (the super master). Each pair configured the crawler to stream its crawl results both to their local Gephi and to the super master Gephi. That way, each pair could see what they had crawled on their localhost, while the super master Gephi had the whole view of everyone’s work compiled into one graph.
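On the crawler side, streaming to both the local Gephi and the super master just means POSTing each event to several workspace URLs. ArrowV’s own code isn’t shown here; below is a minimal standard-library sketch of the idea, in which `broadcast`, `http_post` and the super master address are hypothetical. The HTTP call is passed in as a parameter so the fan-out can be exercised without any Gephi running, and one unreachable master does not stop the others from receiving the event.

```python
import urllib.request


def http_post(url, body):
    """POST one JSON event string to a Gephi Streaming master."""
    request = urllib.request.Request(url, data=body.encode("utf-8"), method="POST")
    with urllib.request.urlopen(request, timeout=5) as response:
        response.read()


def broadcast(event, master_urls, post=http_post):
    """Send the same event to every master; return the URLs that failed."""
    failed = []
    for url in master_urls:
        try:
            post(url, event)
        except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
            failed.append(url)
    return failed


if __name__ == "__main__":
    masters = [
        "http://localhost:8080/workspace0?operation=updateGraph",     # the pair's own Gephi
        "http://192.168.0.42:8080/workspace0?operation=updateGraph",  # hypothetical super master
    ]
    sent = []
    # Dry run with a fake transport that only records where the event would go
    print(broadcast('{"an":{"A":{}}}', masters, post=lambda url, body: sent.append(url)))
    print(len(sent))
```

Returning the failed URLs (rather than raising) lets the crawler keep going and retry or log them later.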

It was nice to see that 15 people could build a quick web map of a topic in 30 minutes. OK, it wasn’t perfect and there was still some work to do, but it was a good proof of concept.

It raises a lot of (new) questions and constraints, but it opens the gate to powerful tools that smartly mix technology and humans.

That’s all for the moment. I’ll try to keep this article up-to-date if new stuff appears.